Photo by Mark Harkin, via Flickr

Virgin Atlantic Airways recently partnered with top-notch researchers at the London School of Economics and the University of Chicago in an attempt to get its pilots to save jet fuel (and the planet) via nudging.

The study was a success, and it got nice coverage from the Washington Post (“Virgin Atlantic just used behavioral science to ‘nudge’ its pilots into using less fuel. It worked.”). This led to a series of tweets, which I’ve unscientifically sampled below:

Virgin Atlantic just used behavioral science to “nudge” its pilots into saving lots of fuel https://t.co/OzZruShjAO

— Washington Post (@washingtonpost) June 22, 2016

Virgin Atlantic saved £3.3 million on fuel costs just by mailing pilots info about their fuel usage. https://t.co/Mh2J0VbEqQ

— Karen McGrane (@karenmcgrane) July 1, 2016

Behavioral science at work in the airline industry! Check out the detail in the article below. https://t.co/r9qvB0Apww

— Virginia ABA (@VirginiaABA) June 27, 2016

How Virgin used behavioural economics to nudge pilots to save fuel – nearly 7000 metric tons of the stuff – https://t.co/ZKt7TRAqtc

— #ogilvychangeNZ (@ogilvychangeNZ) June 26, 2016

There’s good reason for the back slapping. The study used a randomized controlled design, which is considered the gold standard for these experiments. The researchers did their best to track the outcomes of interest (fuel savings and carbon emissions avoided) rather than intermediate ones. And it was all done in a real-world setting rather than the laboratory.

But despite all the hoopla, the isolated effects of the nudging were relatively modest; the majority of the fuel savings accrued to all pilots in the study… even those who weren’t nudged.

Let’s break it down. The researchers randomized a bunch (335, to be exact) of Virgin Atlantic pilots to one of four arms (i.e., study groups), each of which was treated in a slightly different fashion:

- Control: This group received no nudging.

- Information: These pilots got monthly reports by snail mail showing how well they’d done on three key measures of fuel use.

- Info + Targets: These pilots got the same reports as group #2, augmented with whether or not they’d achieved personalized targets for the key fuel use measures.

- Info + Targets + Charity: This group got everything that the pilots in group #3 got, and were also able to make a donation to charity when they achieved their personalized targets each month.

Groups 2 through 4 are intervention arms because the researchers intervened on them in a way that differed from usual practice. Group 1 is the control arm. By comparing how the subjects in the other groups did relative to those in Group 1 (and because pilots were assigned to groups randomly), we can better understand whether any difference was truly due to the intervention.
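To make the random assignment concrete, here is a minimal Python sketch of one simple way to split 335 pilots across the four arms. The pilot IDs, the seed, and the shuffle-then-deal approach are illustrative assumptions; the researchers’ actual randomization procedure isn’t described here and may well have been more elaborate (for example, stratified by pilot characteristics).

```python
import random
from collections import Counter

ARMS = ["Control", "Information", "Info + Targets", "Info + Targets + Charity"]


def assign_arms(pilot_ids, seed=0):
    """Shuffle pilots, then deal them into arms round-robin so arm sizes differ by at most one."""
    rng = random.Random(seed)  # seeded so the assignment is reproducible
    shuffled = list(pilot_ids)
    rng.shuffle(shuffled)
    return {pid: ARMS[i % len(ARMS)] for i, pid in enumerate(shuffled)}


# 335 hypothetical pilot IDs, matching the study's headcount.
assignment = assign_arms(range(335))
print(Counter(assignment.values()))
```

Because every pilot’s arm depends only on the shuffle, no characteristic of the pilot (experience, routes, attitude toward fuel saving) can influence which group they land in, which is what lets differences between arms be attributed to the interventions.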

Pilots’ fuel use behaviors were measured for the 13 months prior to being assigned randomly to one of the four groups, for 8 months during which the intervention was applied, and then for 6 months after all of the interventions were removed.

This arrangement allowed the researchers to compare fuel use between the groups, but also within each group over time. That is, not only could they compare (say) how the control group did relative to the information group during the eight-month intervention period, but they could also compare whether behaviors changed from the pre-intervention period to after the intervention had been applied.
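The two kinds of comparison can be combined into a difference-in-differences calculation. The sketch below is a simplified illustration, not the researchers’ estimation method: it assumes we already have each arm’s mean per-flight outcome before and during the intervention, treats the control arm’s change over time as the shift common to everyone, and credits a treated arm only with the change beyond that. The `ArmMeans` type, the `decompose` function, and the numbers in the usage line are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ArmMeans:
    """Mean per-flight outcome for one study arm (hypothetical units, e.g. dollars saved)."""
    pre: float     # mean over the pre-intervention period
    during: float  # mean over the intervention period


def decompose(control: ArmMeans, treated: ArmMeans) -> dict[str, float]:
    """Split a treated arm's improvement into a common shift and a nudge effect."""
    # Change in the control arm over time: something that affected everyone,
    # which the researchers attributed to a Hawthorne effect.
    common_shift = control.during - control.pre
    # Difference-in-differences: the treated arm's change beyond the control arm's change.
    nudge_effect = (treated.during - treated.pre) - common_shift
    return {"common_shift": common_shift, "nudge_effect": nudge_effect}


# Hypothetical numbers, chosen only to show the arithmetic.
result = decompose(ArmMeans(pre=100.0, during=110.0), ArmMeans(pre=101.0, during=114.0))
print(result)  # {'common_shift': 10.0, 'nudge_effect': 3.0}
```

Note that `common_shift` only says that something changed for the control arm over time; nothing in the arithmetic identifies what caused it.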

Because no intervention was applied to the control group, common sense would suggest that the behaviors for the pilots in that group wouldn’t change much. In this case, common sense was wrong: behaviors in the control group improved remarkably.

The researchers attributed this improvement to the Hawthorne effect: the tendency of people to behave differently when they know they’re being observed. In this case, the Hawthorne effect was far, far larger than the effects of any of the nudges.

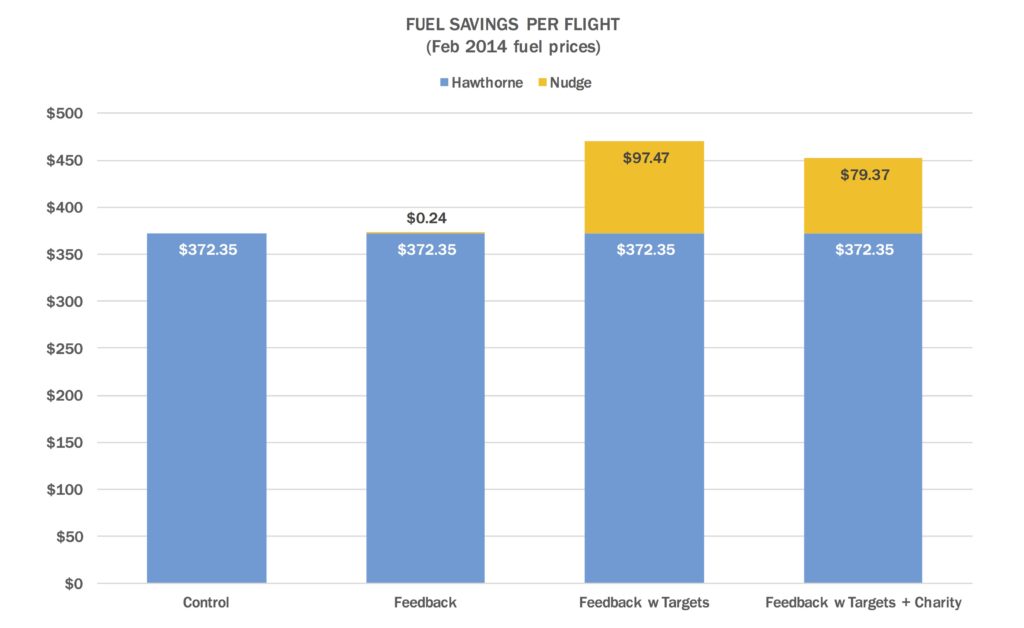

Here’s a chart I put together from data reported in the researchers’ published study. It shows the per-flight savings (in dollars) for each of the four groups, split between the Hawthorne effect and the nudging effect.

Of note, jet fuel prices are considerably lower today than they were in February 2014. IATA reports that the jet fuel price as of 24 June 2016 is $457 per metric ton, compared with the $768 per ton used in the study. At today’s price, the Hawthorne effect is worth about $217 per flight, while the best-performing nudge (information plus targets) saves about $57 per flight.
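The price adjustment is simple proportional arithmetic. Assuming the tons of fuel saved per flight don’t depend on the fuel price, a dollar figure at one price can be converted to tons and then back into dollars at another price. A minimal Python sketch (the function and constant names are hypothetical):

```python
STUDY_PRICE_PER_TON = 768.0    # USD per metric ton, the price used in the study
CURRENT_PRICE_PER_TON = 457.0  # USD per metric ton, IATA figure as of 24 June 2016


def tons_saved(dollars_saved: float, price_per_ton: float) -> float:
    """Convert a per-flight dollar saving into metric tons of fuel."""
    return dollars_saved / price_per_ton


def reprice(dollars_saved: float, old_price: float, new_price: float) -> float:
    """Re-express a dollar saving at a new fuel price, holding tons saved fixed (assumption)."""
    return tons_saved(dollars_saved, old_price) * new_price


# Back out approximate per-flight values at the study's price from the rounded figures in the text.
hawthorne_at_study_price = reprice(217.0, CURRENT_PRICE_PER_TON, STUDY_PRICE_PER_TON)
nudge_at_study_price = reprice(57.0, CURRENT_PRICE_PER_TON, STUDY_PRICE_PER_TON)
print(round(hawthorne_at_study_price), round(nudge_at_study_price))  # prints: 365 96
```

Since the $217 and $57 inputs are themselves rounded, the back-calculated values are only approximate.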

Some nudge fans might argue that making a distinction between the Hawthorne effect and the other interventions is a mistake; the Hawthorne effect should be counted as a nudge. In a sense, it could be a nudge; pilots were free to either change or not change their fuel use behaviors. The nudge (they might say) was simply to make it clear to the pilots that their individual behaviors were being monitored.

I find this interpretation less than compelling for one simple reason: the study design doesn’t allow us to conclude that the improvement in the control group represents a Hawthorne effect at all. All of Virgin Atlantic’s pilots were enrolled in the experiment, so we don’t have any pilots who weren’t exposed to the “monitoring.”

The only thing that the study can tell us is that something changed around the time the experiment began, and that it affected the control group (and presumably the other groups as well). So even if we all agreed that the Hawthorne effect counts as a nudge, the design of the study doesn’t allow us to know whether what we observed is really a Hawthorne effect.

* * *

There’s a reason nudge interventions are called nudges: they generally don’t yield gigantic changes in behavior… at least not without major investments. (The most powerful design approaches are to change the default behavior and offer the opportunity to opt out, or to implement active choice. Putting either of these strategies to work involves a lot of effort and process reengineering.)

Although nudges aren’t magic, they are often highly cost effective. That is, they often yield effects that are outsized relative to their costs. And so it is with Virgin Atlantic’s nifty experiment.